Data Engineering Bootcamp

This program offers hands-on experience in Data Engineering. You will delve into designing data architectures, building sophisticated databases and automated data pipelines, and running data operations (DataOps) on the popular Microsoft Azure cloud platform, using tools including Apache Spark, Apache Airflow, Kafka and Microsoft Power BI. By mastering modern data engineering tools, you’ll efficiently build end-to-end real-time analytics projects, handling everything from ingesting and cleaning both structured and unstructured data to managing and monitoring data pipelines.

Start your journey to a global career in Data Engineering! Gain hands-on skills and become an industry-ready professional.

03 Months

Saturday, Sunday

12:00 - 2:00 PM PKT

08 November, 2025

Online & Interactive

English

What You’ll Learn

- Understanding of Databases, Data Warehouses, Data Lakes & Data Marts.

- Difference between Structured, Semi-Structured and Unstructured Data and how to process each type.

- Understanding of CDC (Change Data Capture) & SCD (Slowly Changing Dimensions) Types, their uses and functional capabilities.

- Batch Data vs Stream Data

- Difference between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform), two core concepts of Data Engineering, and when to use each based on requirements. We will create both ETL & ELT pipelines using MySQL, Python, PySpark and tools such as Talend, Airflow and Kafka.

- Understanding of OLAP & OLTP Methodologies and their situational uses

- Importance of Dimensional Modelling and Differences between Star vs Snowflake Schemas
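To make the ETL versus ELT distinction in the list above concrete, here is a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for MySQL or a cloud warehouse, and the table names and sample rows are hypothetical. In ETL the cleaning happens in Python before loading; in ELT the raw rows are loaded first and cleaned with SQL inside the database.

```python
import sqlite3

# Hypothetical raw records; amounts arrive as untrimmed strings.
RAW_ROWS = [("alice", " 10.50 "), ("bob", "7"), ("carol", " 3.25")]


def run_etl(conn, rows):
    """ETL: transform in Python first, then load only the cleaned rows."""
    conn.execute("CREATE TABLE sales_etl (customer TEXT, amount REAL)")
    cleaned = [(name.title(), float(amount.strip())) for name, amount in rows]
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", cleaned)


def run_elt(conn, rows):
    """ELT: load the raw rows as-is, then transform inside the database."""
    conn.execute("CREATE TABLE sales_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", rows)
    conn.execute(
        """
        CREATE TABLE sales_elt AS
        SELECT upper(substr(customer, 1, 1)) || substr(customer, 2) AS customer,
               CAST(trim(amount) AS REAL) AS amount
        FROM sales_raw
        """
    )


def total(conn, table):
    """Sum the amount column of one of the tables created above."""
    return conn.execute(f"SELECT SUM(amount) FROM {table}").fetchone()[0]


conn = sqlite3.connect(":memory:")
run_etl(conn, RAW_ROWS)
run_elt(conn, RAW_ROWS)
print(total(conn, "sales_etl"), total(conn, "sales_elt"))
```

Both routes end with the same cleaned table. The practical difference is where the transformation runs and whether the untouched raw data stays available in the target system.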

Python Essentials:

- Data Types & Variables including Primitive Types (int, float, str, bool etc)

- Type Conversions (str(), int())

- Dynamic typing

- Arithmetic, Comparison, Logical, Assignment and Bitwise Operators

- Understanding of Control Flow using Conditional Statements (if, else, elif), Loops (for, while), Loop Control (break, continue, pass)

- Defining Functions (def), Functional Arguments (positional, default, *args, **kwargs), Return Values, Anonymous Functions (lambda)

- Data Structures in Python, starting with Lists (Creating, Slicing, Appending, Removing, Iterating, Sorting)

- Tuples, Dictionaries & Sets

- String Manipulation using String Methods (upper(), lower(), split(), replace()) and String Formatting (f-strings, .format())

- Error Handling (try, except, finally) & Raising Exceptions (raise)

- Libraries in Python

Python in Data Engineering:

- Data Extraction and Data Manipulation by working with various File Formats

- Using NumPy for Numerical Operations

- Database Interaction using mysql-connector, pymysql and other modules

- Use of CRUD Operations

- Using APIs for Requests & Responses

- Data Transformation using Pandas

- Data Loading into Snowflake Database

- Logging for tracking Pipeline Execution

- Connecting Python to Big Data Tools such as PySpark

- Reading Data from Cloud Storage

- Working with Real-Time Data using Python and Kafka

Case Study & Project:

Create an ETL/ELT Pipeline using a Netflix dataset that includes information on TV Shows, Movies and Documentaries.

Use the Pandas and Snowflake Connector libraries in Python for extraction, transformation and loading of data into Snowflake. Use NumPy to assign weights and for analysis.

MySQL Fundamentals:

- Role of MySQL in RDBMS

- ACID Properties

- Data Types in MySQL, e.g. Numeric (int, float, decimal), String (varchar, text) and Date/Time (date, datetime, timestamp)

- Constraints (PRIMARY KEY, FOREIGN KEY, NOT NULL, UNIQUE, DEFAULT) and AUTO_INCREMENT

- Query Writing using CREATE, DROP, SELECT, INSERT, UPDATE, DELETE

- Use of Various Clauses and Operators (WHERE, HAVING, UNION, LIKE, BETWEEN, IN, ORDER BY, LIMIT) and Joins (INNER, LEFT, RIGHT, and FULL OUTER, which MySQL emulates by combining LEFT and RIGHT joins with UNION)

- Aggregate Functions (COUNT, SUM, AVG, MIN, MAX) & Window Functions (ROW_NUMBER, RANK, SUM OVER, LEAD, LAG)

- Creating Functions or Stored Procedures

- Subqueries and Nested Joins

- Indexing and Partitioning

- Views

- Logging & Error Handling using the EXPLAIN Statement and Error-Checking Queries
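As an illustration of the window functions named in the list above, the sketch below runs ROW_NUMBER, a running SUM OVER and LAG on a small hypothetical `orders` table. It uses Python's built-in `sqlite3` module as a stand-in so it runs without a database server; MySQL 8.0 and later accept the same window syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (customer TEXT, order_day INTEGER, amount INTEGER)"
)
# Hypothetical sample data: two customers with a few orders each.
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("a", 1, 10), ("a", 2, 30), ("b", 1, 5), ("a", 3, 20), ("b", 2, 15)],
)

# Unlike GROUP BY, window functions keep every row and add per-partition values.
QUERY = """
SELECT customer,
       order_day,
       amount,
       ROW_NUMBER() OVER (PARTITION BY customer ORDER BY order_day) AS order_seq,
       SUM(amount)  OVER (PARTITION BY customer ORDER BY order_day) AS running_total,
       LAG(amount)  OVER (PARTITION BY customer ORDER BY order_day) AS prev_amount
FROM orders
ORDER BY customer, order_day
"""

rows = conn.execute(QUERY).fetchall()
for row in rows:
    print(row)
```

Each output row carries its position within the customer's orders, the running total so far, and the previous order's amount (NULL, shown as `None`, for the first order).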

Advanced Data Engineering in MySQL:

- Normalization Forms (1NF, 2NF, 3NF), Schema Designs and Dimensional Modelling (Snowflake and Star Schemas)

- Use of Common Table Expressions (CTEs) using WITH Clause

- Transactions (BEGIN, COMMIT, ROLLBACK)

- Stored Procedures, Functions (User-Defined Functions (UDFs)) and Triggers (for Automation, BEFORE and AFTER Triggers)

- Optimization Strategies including Index Optimization (Composite Indexes, Covering Indexes) and the EXPLAIN Statement for Analyzing Query Execution Plans and Improving Performance

- Horizontal Partitioning for Large Datasets

- ETL with MySQL using LOAD DATA INFILE for Bulk Imports

- Horizontal Scaling using Database Sharding

- The Three Vs (Volume, Velocity & Variety)

- Transforming, refining, and improving raw datasets.

- Managing incomplete data, de-duping, adjusting data types, and ensuring consistency.

- Organizing data through reshaping and pivoting operations.

- Efficiently merging and integrating diverse datasets.

- Extracting and processing information from JSON, XML, and other unstructured formats.

- Importance of Cloud Platforms including Snowflake, Azure & AWS.

- Difference between On-Prem & Cloud Platforms

- Data Storage & Analytics on Cloud

- Dive into Basic Cloud Services of AWS and Azure

- Scalable & Efficient Data Engineering on Cloud

Azure Cloud Case Study & Project:

Create a cloud-based real-time data pipeline that collects sensor data, processes it and stores it for analysis, for example generating real-time insights and triggering alerts. The goal of this case study is to understand the importance of cloud services and the role they play in Big Data.

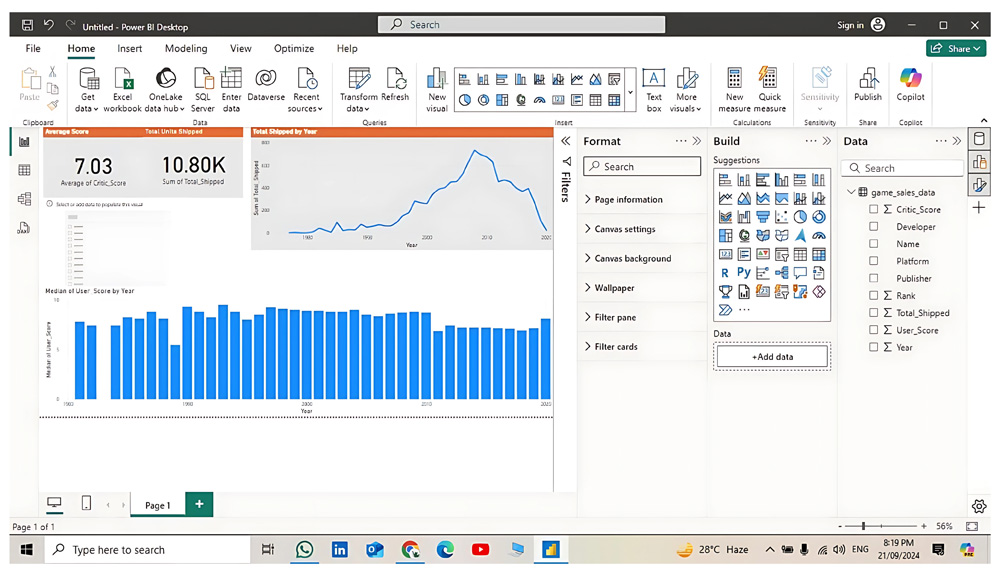

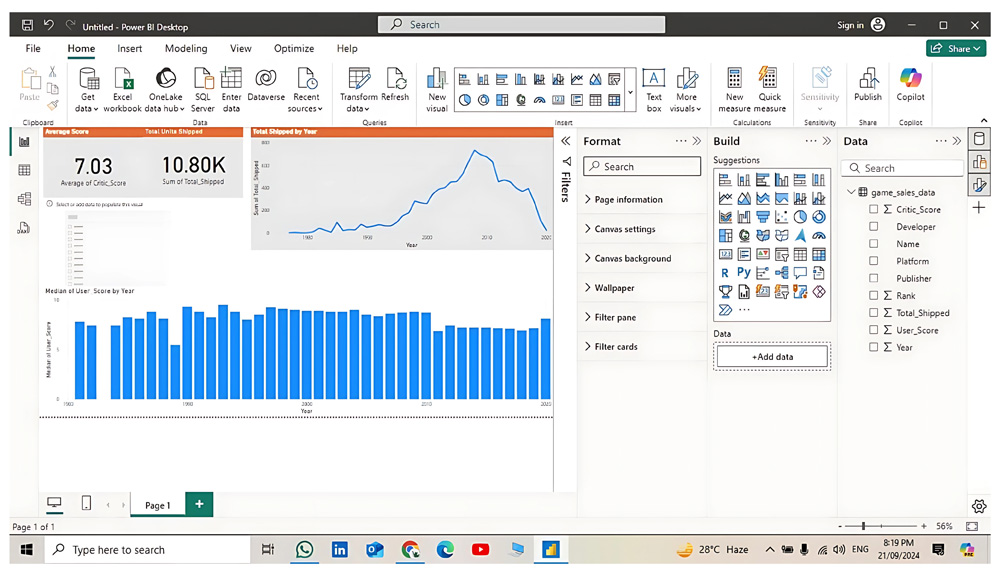

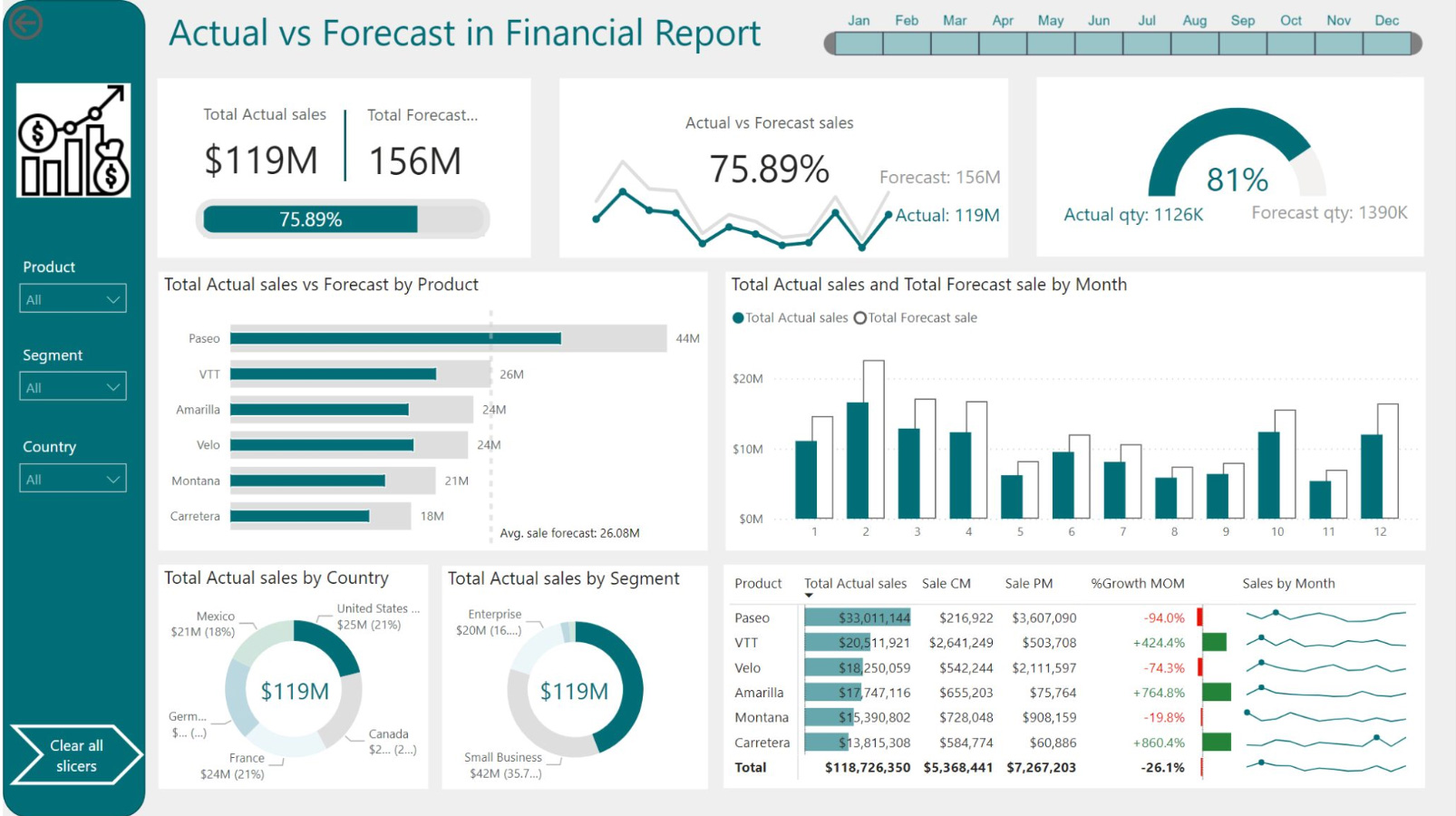

- Integrate BI tool with Different Data Sources

- Importance of Data Visualization Tools.

- Concepts of Tableau and Power BI in Data Visualization.

- Types of different charts for data visualization

- Creating interactive dashboards and reports

Case Study and Project:

Create an attractive Dashboard in Tableau/Power BI to display trends in Data.

This case study will help students learn about integrating Tableau with a Snowflake database, data preprocessing, choosing the right visualization and creating an interactive dashboard for presentation.

- Stream Processing Architecture

- Event driven Systems and Message Queues

- Data Ingestion including Capturing and Publishing data in real-time

- Stateful vs Stateless Processing

- Components in a Real-Time Data Pipeline including Data Sources (sensors, social media, databases etc), Data Processing (stream transformations, aggregations, filtering, windowing), Data Storage (streaming data stores like Apache Kafka topics or real-time databases), Alerts and Downstream Systems

- Importance of Tools like Apache Spark, Apache Kafka, Apache Flink and AWS Kinesis in the world of Real-Time Streaming Data.
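The stateless versus stateful distinction and the windowing step listed above can be sketched in plain Python. This is only an illustration under assumed inputs: the sensor events, the function names and the 10-second tumbling window are hypothetical, and a real pipeline would read from a stream such as a Kafka topic rather than a list.

```python
from collections import defaultdict

# Hypothetical sensor events: (timestamp_seconds, sensor_id, temperature_celsius)
EVENTS = [
    (0, "s1", 20.0),
    (3, "s1", 22.0),
    (7, "s2", 30.0),
    (12, "s1", 26.0),
    (14, "s2", 34.0),
]


def to_fahrenheit(event):
    """Stateless: each event is transformed on its own, with no memory of others."""
    ts, sensor, celsius = event
    return ts, sensor, celsius * 9 / 5 + 32


def tumbling_window_average(events, window_seconds):
    """Stateful: keeps a running sum and count per (window, sensor) across events."""
    state = defaultdict(lambda: [0.0, 0])  # key -> [sum, count]
    for ts, sensor, value in events:
        window_start = ts - ts % window_seconds  # start of the fixed-size window
        bucket = state[(window_start, sensor)]
        bucket[0] += value
        bucket[1] += 1
    return {key: total / count for key, (total, count) in state.items()}


converted = [to_fahrenheit(e) for e in EVENTS]
averages = tumbling_window_average(EVENTS, window_seconds=10)
print(converted[0])
print(averages)
```

The stateless step can be scaled out freely because events are independent, while the windowed average has to keep state per key, which is what stream processors manage and checkpoint on your behalf.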

Case Study and Project using Apache Kafka:

Create an ELT Pipeline for a real-time analytics system that also supports monitoring and alerting.

We will use streaming data in this case study, manipulate and process it as needed, and understand its purpose in the world of Big Data. It will also be an interesting breakthrough in the world of analytics and validation.

- Importance of Pipeline Automation & Scheduling

- Understanding Logs for Error Detection in Airflow

- Understanding of Queued Data in Airflow

- What is a DAG?

- A deep dive into the Task Operators we create within a DAG file and why they matter

- Task Execution Flow (Upstream and Downstream)
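The idea of a DAG with upstream and downstream tasks, as listed above, can be sketched without Airflow installed. The example below is plain Python using the standard library's `graphlib` module (Python 3.9+), not Airflow's own API, and the task names are hypothetical. It shows how declaring each task's upstream dependencies is enough to derive a valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of upstream tasks it waits for.
UPSTREAM = {
    "extract": set(),
    "clean": {"extract"},
    "aggregate": {"clean"},
    "load": {"clean"},
    "report": {"aggregate", "load"},
}


def execution_order(upstream):
    """Return one valid run order in which every task follows its upstream tasks."""
    return list(TopologicalSorter(upstream).static_order())


def downstream_of(task, upstream):
    """Return the tasks that directly depend on the given task."""
    return sorted(name for name, deps in upstream.items() if task in deps)


order = execution_order(UPSTREAM)
print(order)
print(downstream_of("clean", UPSTREAM))
```

Because the graph has no cycles, an order always exists. `aggregate` and `load` do not depend on each other, so a scheduler is free to run them in parallel once `clean` has finished.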

Case Study using Airflow and PySpark:

Create an ETL Pipeline for Batch Data or ELT Pipeline for Streaming Data.

We will use the Airflow Scheduler and a Big Data API such as PySpark with Python to create our pipeline. We will understand the basics of these tools and task operators by creating an Airflow DAG file. We will automate our data pipeline in the Airflow Scheduler and monitor its logs on the Airflow Portal.

- At the end of each module, the trainer will mentor students as closely as possible so they understand both their weak and strong points

- Students will come up with multiple ideas from real world examples for their respective capstone projects

- Out of these ideas, they will collaboratively decide on one use case, which will be the mega

- Create a Problem Statement and gather requirements around that use case. While creating the project, students will document every technical aspect covered in their work.

- How to crack interviews and start a career in Data Engineering and Analytics

- Special mentoring sessions from global Industry leaders from Amazon, Microsoft, Nike, Jazz and more.

Tools you will learn

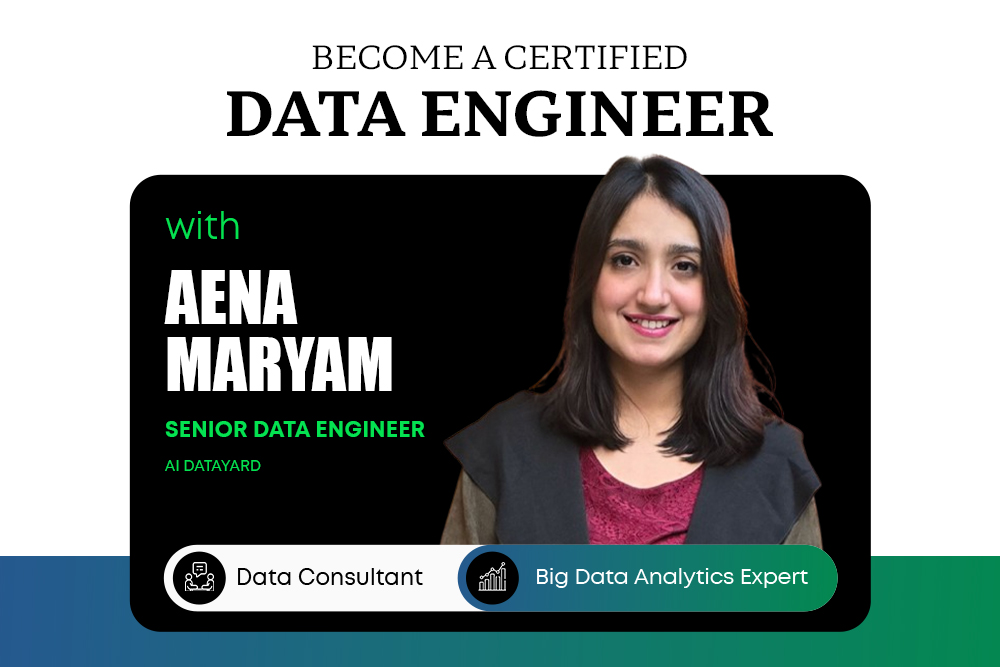

Meet your Instructor

Aena Maryam

Senior Data Engineer | Data Architecture | BigData Analytics | Snowflake Cloud | Airflow | PySpark | 4.5 Years of Impactful Data Solutions

Aena is a Senior Data Engineer with 4.5 years of experience in designing and optimizing global ETL, DA, BI, and DWH solutions. She has strong hands-on expertise in data wrangling, transformation, analysis, and managing end-to-end Real-Time Analytics Projects.

At Afiniti, she built projects from scratch, loading data, performing complex analysis, and automating solutions using DBT and Snowflake. With experience in both multinational corporations and startups, she understands the challenges newcomers face in data engineering and analytics.

Who Is This Program For?

Executives

Leaders who want to master data insights to drive smarter business decisions

Professionals

Those looking to level up their career with impactful data skills

Students

Those who want practical training in Data Engineering and Analysis with a complete industry-oriented approach

Data Enthusiasts

Individuals across industries like Supply Chain, Finance, Oil & Gas, Healthcare, and Sales who want to use data to solve real-world challenges

Trusted by Leading Companies

HOW DOES THE PROGRAM WORK

Interactive Live Sessions

Learn from top data industry leaders with in-depth, industry-relevant mentorship

Hands-On Training

Master analytics tools through 100% practical, real-world training

Capstone Projects & Case Studies

Complete continuous tasks, capstone projects, and case studies to ensure you can apply skills in the industry

Solid Profile Building

Focus on building a strong profile and personal brand to help you stand out in the job market.

Bootcamp Investment

Become a Certified Data Engineer and Future-Proof Your Career!

standard

PAKISTAN NATIONALS

Lump-sum

PAKISTAN NATIONALS

Lump-Sum

INTERNATIONAL PROGRAM

EARN A CERTIFICATE

Certify Your Expertise

Get certified and build a powerful profile that attracts employers who trust skills from globally recognized platforms

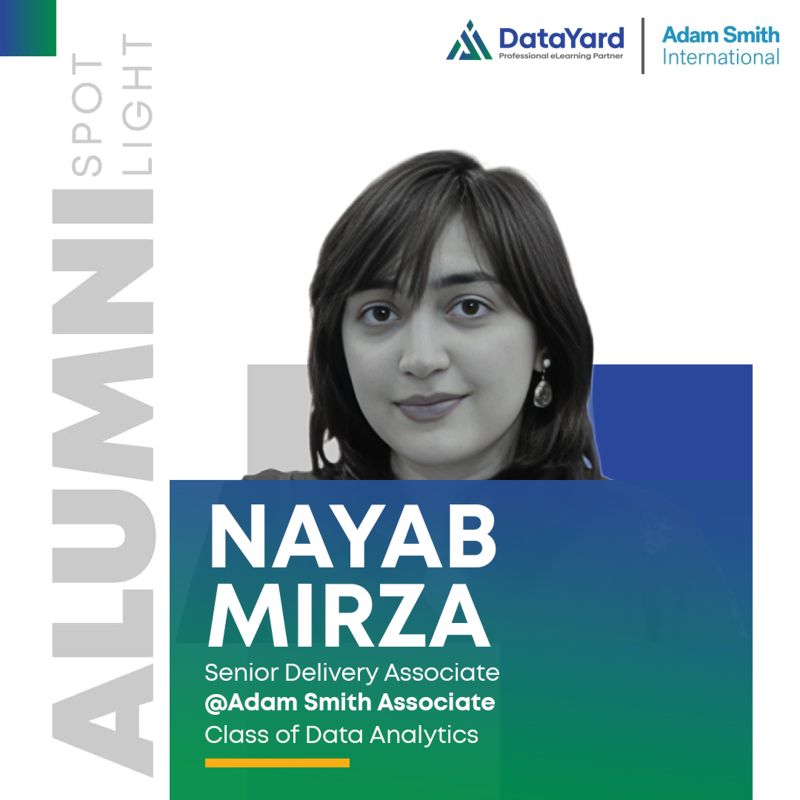

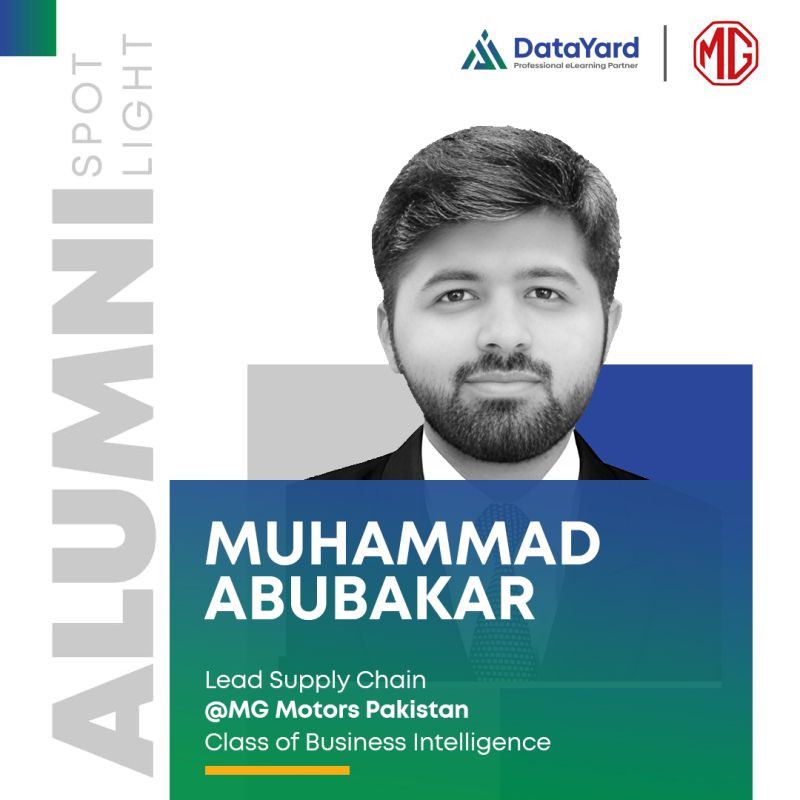

Real Stories. Real Impact.

Alumni Stories of Growth and Success